Today, the FTC released a staff report presenting Office of Technology (OT) staff's findings from the agency's study of large AI partnerships and investments.

The last two years have seen the creation or expansion of three partnerships between the largest cloud service providers (“CSPs”) and prominent AI developers: Microsoft-OpenAI, Amazon-Anthropic, and Google-Anthropic. These partnerships have included more than $20 billion in cumulative financial investment[1] and substantial non-monetary value exchange.[2] Notably, each of the three CSP partners also develops AI models and products.

In January 2024, the agency launched a market study into these partnerships, using its 6(b) authority to better understand them and their potential implications for competition and consumers.

The report provides important insights into key partnership terms. It also examines how the partnerships could potentially impact access to certain inputs to AI development, such as computing resources and engineering talent; increase contractual and technical switching costs for the AI developer partners; and provide CSP partners access to sensitive technical and business information that may be unavailable to others.

This blog post first describes the agency work and events that led to today's report on large AI partnerships and investments, then summarizes the study's findings, and concludes with questions for future exploration.

Public reporting and agency initiatives underscored potential concerns and the need for more access to information about these partnerships

Throughout 2023 and 2024, participants in various FTC events and initiatives shared perspectives about the dynamics of the AI ecosystem, including the relationship between cloud providers and AI developers across the ecosystem and these three partnerships in particular.[3] These events and initiatives included a Cloud Computing RFI in March 2023, a Cloud Computing panel in May 2023, and an FTC Tech Summit in January 2024 on issues related to AI, cloud computing, and competition. Staff later produced multiple quote books and a blog post documenting what we heard from participants and the public.

One panel participant, a reporter from The Information, described the concerns of an AI startup regarding the partnerships: "We raise money on this idea, but now OpenAI, which has $13 billion of funding, is going after the same thing. How are we supposed to compete against that?"[4] Panelists discussing hardware and infrastructure examined the role of the three largest cloud service providers in the AI ecosystem. For instance, a professor of law at Vanderbilt University opined that this dynamic could lead to "less innovation in the model or application layers" because large "vertically-integrated" CSPs "can incorporate those new ideas directly into their own offerings."[5]

Concerns about the potential impacts of these AI partnerships on consumers and competition led the Commission to initiate a study using its 6(b) authority, which allows it to request non-public information from entities to inform its mission.[6]

As the Chair wrote when the study was launched, “History shows that new technologies can create new markets and healthy competition. As companies race to develop and monetize AI, we must guard against tactics that foreclose this opportunity. Our study will shed light on whether investments and partnerships pursued by dominant companies risk distorting innovation and undermining fair competition.”[7]

Staff report describes extensive exchange between partners across chip, data, model, and application layers

The report provides insights to the public on key partnership terms. Among other provisions, the partnerships include:

- Significant equity and certain revenue-sharing rights;

- Billions of dollars in cloud commitments;[8]

- Certain consultation, control, and exclusivity rights in the AI developer partners;

- The provision of large amounts of discounted computing resources;

- Sharing of key technical and business information;

- The potential for exchange of talent and data between partners; and

- Opportunities to expand current cloud and AI products, including through the prospect of integration into partner CSPs’ products.

In the words of one CSP partner, information-sharing amounted to a "multi-year crystal ball into the future needs of AI infrastructure."[9] The Commission's 6(b) report also describes how one respondent believed that only a small number of players could scale past the capabilities of current state-of-the-art models, and that partnerships between AI developers and CSPs were an important way for model developers to keep ahead of the pack.[10]

For more information regarding the terms of the partnerships, see the agency’s press release or the executive summary of the report.[11]

Using these aggregated findings, staff outlined some areas to watch:[12]

The partnerships could affect access to certain inputs, such as computing resources and engineering talent: For instance, the report describes the potential that a CSP partner might consider limiting computing resource inputs for both partner AI developers and non-partner AI developers. Computing resources are a key input for generative AI developers, and an inability to access them could impact both current AI developers and potential future entrants. In internal documents, one CSP partner employee wrote that “we face a problem today where scarce GPU resources are being disproportionately used by a few large customers who are getting steep discounts (up to [REDACTED] discounts on [REDACTED]) is [sic] driving hoarding behavior.”[13] Another open question is whether the partnerships may consolidate access to the AI engineering talent pool in the hands of a limited number of firms.

Computing resources have been in demand for over a century: here, mathematician Arthur Cayley receives a grant in 1862 for "computing resources" in the form of two assistants. Sources 1 and 2.

The partnerships could increase contractual and technical switching costs for AI developer partners: The partnerships might affect AI developer partners by restricting their use of multiple CSPs or by making it more difficult for them to change CSPs. For example, the partnerships all reportedly included billions of dollars in cloud computing spending commitments by the AI developer partners.[14] Multiple partnerships also have provisions that impose conditions or restrictions on AI developer partners' ability to operate with other CSPs or companies.[15] In addition, the report describes potentially lengthy migration times when moving between AI-specialized cloud services and between AI chips, technical barriers that may make it difficult to switch to another CSP.[16]

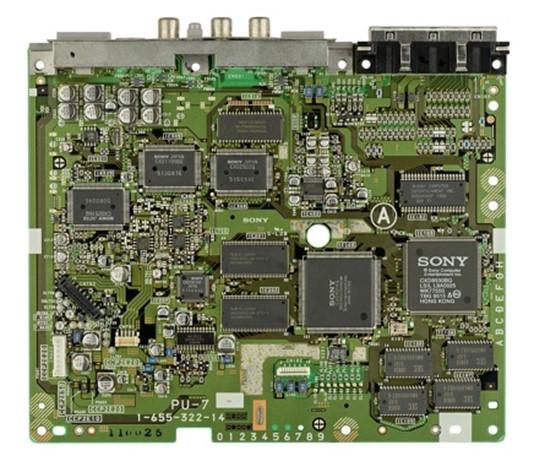


The first Sony PlayStation motherboard, which contained one of the progenitors of the modern GPU. The sophisticated nature of current GPUs can make switching AI chips difficult.[17] Source.

The partnerships provide CSP partners access to sensitive technical and business information from AI developers that may be unavailable to others: This can include generative AI models, AI development methods, confidential chip co-design plans, and customer usage and revenue numbers. This information could potentially be used to develop the CSPs' own internal models and applications, some of which may compete with those of their partners. CSP partners also receive financial and strategic business information (including non-public, potentially sensitive information) through the partnerships as well as through their role as platforms. In internal documents, one CSP highlighted: "If we wait for our own models to mature, we risk not participating in developing the necessary IP to build, operate, and secure these applications. By partnering with [REDACTED], we can more quickly learn the 'art' of refining and reinforcing these types of models."[18]

Questions for future exploration regarding the role of the three largest CSPs and large AI developers and the AI ecosystem more broadly

While the 6(b) study provided important insights regarding these specific partnerships, there continue to be broad areas for future exploration relating to AI, competition, and consumer protection, including:

- How have non-partner AI developers and competition among AI developers more generally been impacted by data advantages gained by firms with access to large datasets (e.g., via in-house user-generated content, licensing deals with rights holders, etc.)?[19]

- What switching costs apply to AI chips other than non-interoperable chip programming languages (e.g., via contracts, technical options, or otherwise)?

- What are the potential impacts of certain cloud contract features such as egress fees, committed spending, and cloud credits? What alternative contract terms and pricing models exist or are possible?

- How do CSPs share information between their AI development arms, investment divisions, and cloud teams for the purpose of strategic planning? What other potential advantages might the CSPs gain from their triple position as an investor, intermediary, and market participant?

- How might CSPs use potential technical advantages described in the report to benefit their non-AI cloud services? For example, could AI-specific chip or data center improvements impact other cloud services provided by the 6(b) order recipients?

- How might CSPs steer cloud customers to the CSPs’ own AI models above third-party models, including those of their AI developer partners?

The FTC will continue to use all of its tools, authorities, and the laws on the books to address AI market dynamics that may pose risks to consumers and competition. This is illustrated by the FTC Programmatic Advances Fact Sheet describing the agency’s many enforcement, rulemaking, and research activities in recent years. These actions are a good reminder that our nation’s laws apply to all technologies, regardless of how novel or complex they may seem.

***

Thank you to the Office of Technology and FTC Staff who led and contributed to this matter, including but not limited to: Noam Kantor, Ben Swartz, Nick Jones, Amritha Jayanti, Stephanie Nguyen, and Mark Suter.

1 Report at 4.

2 These value exchanges were reported at the time, but understanding their full extent was one motivation for the agency’s launch of the present 6(b) study, as described below and in the report.

3 The FTC launched a Cloud Computing RFI in March 2023, hosted a Cloud Computing panel in May 2023, and held an FTC Tech Summit in January 2024 on issues related to AI, cloud computing, and competition, later producing multiple quote books and a blog post documenting what we heard from participants and the public.

4 Stephanie Palazzolo participated in the Technology Summit on AI on January 25, 2024. Tech Summit on Artificial Intelligence: A Quote Book | Data and Models.

5 Ganesh Sitaraman participated in the Technology Summit on AI on January 25, 2024. Tech Summit on Artificial Intelligence: A Quote Book | Hardware and Infrastructure Edition: Semiconductor Chips and Cloud Services.

6 The FTC has exercised its 6(b) authority in the public interest throughout its history to conduct wide-ranging studies that do not have a specific law enforcement purpose, including by studying partnerships between firms.

8 I.e., AI developer partners have agreed to spend a significant and set amount of investment money on cloud services from their CSP investor, sometimes over a prescribed amount of time.

9 Report at 25.

10 Report at 21.

11 Report at 1.

12 See generally Report at Section 5. As discussed in the report, the narrow scope of this 6(b) study precludes a comprehensive economic or legal analysis of the partnerships.

13 Report at 30.

14 Report at 20.

15 Report at 33.

16 Report at 34.

17 Report at 34.

18 Report at 36.

19 Note that FTC staff has recently highlighted the ways in which some strategies involving the acquisition of data for AI training may be unlawful: AI (and other) Companies: Quietly Changing Your Terms of Service Could Be Unfair or Deceptive